Link aggregation, also known as teaming, is a method of combining multiple network connections into a single, higher-capacity logical connection. It is used to increase throughput, to provide redundancy if one of the links fails, or both. Common methods include static link aggregation and dynamic link aggregation with the Link Aggregation Control Protocol (LACP, IEEE 802.3ad).

VMware vSphere (ESXi) supports link aggregation at the virtual switch level.

CONCEPTS

The key concepts of link aggregation with ESXi and ESX include:

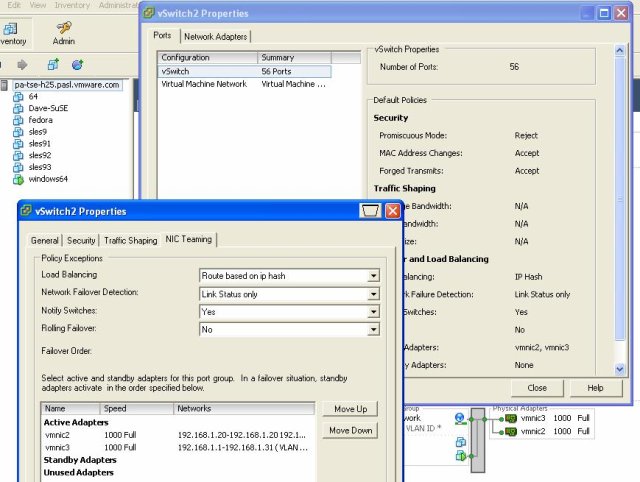

NIC Teaming: VMware ESXi/ESX lets you attach multiple physical NICs (Network Interface Cards) to a single virtual switch or port group as a team of uplinks. NIC teaming provides load balancing and fault tolerance.

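Load Balancing: The load balancing policy determines how traffic is distributed across the active physical NICs in a team. Distribution happens per virtual port, per source MAC address, or per source/destination IP pair rather than per packet, so the adapters share the load but any single traffic stream uses only one NIC.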

Network Failover Detection: This setting controls how ESXi detects that an uplink has failed, either Link status only or Beacon probing. When an adapter fails, its traffic moves to the remaining active adapters or to a standby adapter.

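Failback: This option determines what happens when a failed physical adapter recovers. With Failback set to Yes (the default), the adapter returns to active duty immediately and replaces the standby adapter that took over; with No, it stays inactive until another active adapter fails.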
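The interaction between failover and failback can be sketched as a small model. This is a simplified illustration, not VMware's implementation: `choose_uplinks` and `simulate` are hypothetical names, the adapter names vmnic0 (active) and vmnic1 (standby) are assumptions, and link failure is modeled simply as an adapter leaving the `healthy` set.

```python
"""Simplified model of an explicit failover order with and without failback.

Illustration only; this is not ESXi's actual code.
"""


def choose_uplinks(order, wanted, healthy, current, failback):
    """Return the adapters that should carry traffic after an event.

    order    -- failover order: active adapters first, then standby adapters
    wanted   -- how many adapters should be in use (the number of active ones)
    healthy  -- adapters whose link is currently up
    current  -- adapters that were carrying traffic before this event
    failback -- True: a recovered adapter immediately reclaims its place
                False: a recovered adapter waits until another one fails
    """
    if failback:
        # Always prefer the highest-priority healthy adapters.
        return [nic for nic in order if nic in healthy][:wanted]
    # Keep every adapter that is still working, then fill any empty
    # slots from the failover order.
    chosen = [nic for nic in current if nic in healthy]
    for nic in order:
        if len(chosen) >= wanted:
            break
        if nic in healthy and nic not in chosen:
            chosen.append(nic)
    return chosen


def simulate(failback):
    """Fail vmnic0, then bring it back, and record which adapter is in use."""
    order = ["vmnic0", "vmnic1"]  # vmnic0 active, vmnic1 standby
    in_use = choose_uplinks(order, 1, {"vmnic0", "vmnic1"}, [], failback)
    history = [in_use]
    # vmnic0 loses link: the standby adapter takes over.
    in_use = choose_uplinks(order, 1, {"vmnic1"}, in_use, failback)
    history.append(in_use)
    # vmnic0 recovers: the outcome depends on the failback setting.
    in_use = choose_uplinks(order, 1, {"vmnic0", "vmnic1"}, in_use, failback)
    history.append(in_use)
    return history


if __name__ == "__main__":
    print("Failback Yes:", simulate(True))   # [['vmnic0'], ['vmnic1'], ['vmnic0']]
    print("Failback No: ", simulate(False))  # [['vmnic0'], ['vmnic1'], ['vmnic1']]
```

With Failback set to No, vmnic1 keeps carrying traffic after vmnic0 recovers, which avoids a second interruption if the recovered link is unstable.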

SCENARIOS

Here are the different scenarios where you might use link aggregation:

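Increase bandwidth: By combining multiple links, you can increase the total bandwidth available between two devices. Each individual flow is still carried on a single link, so the gain shows up when many flows share the aggregate.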

Redundancy: If one of the links fails, traffic can continue to flow over the remaining links.

Load Balancing: Traffic can be distributed across multiple links, improving overall performance.
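The arithmetic behind the bandwidth and redundancy scenarios can be sketched as follows. This is a best-case model under stated assumptions: all links have the same speed, each flow is pinned to one link, hashing spreads flows evenly, and protocol overhead is ignored. The function name `lag_capacity` is hypothetical.

```python
def lag_capacity(links, link_gbps, failed=0):
    """Return (aggregate_gbps, per_flow_ceiling_gbps) for a team of equal-speed links.

    Simplified model: every flow is pinned to one physical link, so a single
    flow can never go faster than one link, and failed links drop out of the
    aggregate while the surviving links keep carrying traffic.
    """
    if links < 1:
        raise ValueError("a team needs at least one link")
    if not 0 <= failed <= links:
        raise ValueError("failed must be between 0 and links")
    up = links - failed
    aggregate = up * link_gbps           # best case: flows spread evenly
    per_flow = link_gbps if up else 0    # one flow never spans two links
    return aggregate, per_flow


if __name__ == "__main__":
    # Two 10 Gb/s uplinks, first healthy, then with one link failed.
    print(lag_capacity(2, 10))            # (20, 10)
    print(lag_capacity(2, 10, failed=1))  # (10, 10)
```

The second value is the point that is easy to miss: adding links raises the total, but never the speed of one flow.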

RECOMMENDATIONS

When planning link aggregation between VMware ESXi and Cisco equipment, keep the following recommendations in mind:

Compatibility: Make sure ESXi and the Cisco switch support the same link aggregation protocol. ESXi does not support Cisco's PAgP.

Consistent Configuration: Ensure the same configuration on both ends of the aggregated links. Mismatched settings can cause poor performance or unexpected behavior.

Monitoring: Regularly check the status of the aggregated links on both ends, for example with the “show etherchannel summary” command on the Cisco switch.

LAG PROTOCOLS USE

For link aggregation between VMware and Cisco, two common protocols are used: Static EtherChannel and the Link Aggregation Control Protocol (LACP).

Static EtherChannel: This is a manual method to create a link aggregation group (LAG), and it doesn’t require any protocol negotiation between the switch and the server. In a static EtherChannel setup, all settings are manual, and it is up to the administrator to ensure the configuration matches on both ends of the EtherChannel. In VMware, this requires the load balancing policy “Route based on IP hash” to be configured on the vSwitch or distributed vSwitch.
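To see why IP hash keeps one conversation on one link, here is a sketch of the uplink selection. It uses a commonly cited description of the algorithm (XOR the source and destination IPv4 addresses, then take the result modulo the number of active uplinks); treat that as an assumption, since ESXi's exact hash may differ. The function name `ip_hash_uplink` and the addresses are hypothetical.

```python
import ipaddress


def ip_hash_uplink(src_ip, dst_ip, active_uplinks):
    """Return the index of the uplink chosen for a source/destination IPv4 pair.

    Simplified model of IP-hash teaming (an assumption, not ESXi source code):
    XOR the two addresses as 32-bit integers, then take the result modulo
    the number of active uplinks.
    """
    if active_uplinks < 1:
        raise ValueError("need at least one active uplink")
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % active_uplinks


if __name__ == "__main__":
    # One VM talking to two destinations over a two-uplink team.
    for dst in ("10.0.0.20", "10.0.0.21"):
        print(dst, "-> uplink", ip_hash_uplink("10.0.0.10", dst, 2))
```

Here traffic to 10.0.0.20 uses uplink 0 and traffic to 10.0.0.21 uses uplink 1. Because the same VM's MAC address can therefore appear on several physical ports at once, the switch must treat those ports as a single EtherChannel; otherwise it sees the MAC address moving between ports.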

LACP (802.3ad): With LACP, the switch and the ESXi host exchange control messages to negotiate the formation of the LAG, so each end verifies that the other is actually part of the same group before a link is bundled. This adds verification and reliability compared with static EtherChannel.

The right choice depends on your environment and needs. Static EtherChannel is simpler to set up and works with both standard vSwitches and vSphere Distributed Switches (vDS). LACP provides more robust link aggregation and error checking, but on ESXi it requires a vDS and takes more steps to configure.

The physical switch must support the chosen protocol as well. Most Cisco switches support both static EtherChannel and LACP.
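The pairing rules in this section can be written down as a quick checklist in code. This is a hypothetical helper, not a VMware or Cisco tool; the parameter values are assumptions chosen for illustration. It encodes these rules: Cisco "mode on" (static EtherChannel) needs "Route based on IP hash"; Cisco LACP modes ("active"/"passive") need a vDS with an LACP LAG; two passive ends never negotiate; and PAgP modes ("auto"/"desirable") are not supported by ESXi.

```python
def check_pairing(switch_mode, vswitch_type, esxi_side):
    """Return a list of problems; an empty list means the pairing looks consistent.

    switch_mode  -- Cisco 'channel-group N mode' keyword: on, active, passive,
                    auto or desirable
    vswitch_type -- 'standard' or 'distributed' (hypothetical labels)
    esxi_side    -- 'iphash' for static teaming, or 'lacp-active' /
                    'lacp-passive' for the mode of a vDS LACP LAG
    """
    problems = []
    if switch_mode in ("auto", "desirable"):
        problems.append("PAgP is not supported by ESXi; use 'on' or LACP")
    elif switch_mode == "on":
        if esxi_side != "iphash":
            problems.append("static EtherChannel needs 'Route based on IP hash'")
    elif switch_mode in ("active", "passive"):
        if vswitch_type != "distributed":
            problems.append("LACP requires a vSphere Distributed Switch")
        if not esxi_side.startswith("lacp"):
            problems.append("switch runs LACP but the ESXi side has no LACP LAG")
        elif switch_mode == "passive" and esxi_side == "lacp-passive":
            problems.append("both ends passive: LACP never negotiates")
    else:
        problems.append(f"unknown switch mode {switch_mode!r}")
    return problems


if __name__ == "__main__":
    print(check_pairing("on", "standard", "iphash"))      # []
    print(check_pairing("active", "standard", "iphash"))  # two problems
```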

EXAMPLE

Below is an example of how you could set up link aggregation between an ESXi host and a Cisco switch:

1. Cisco Switch Configuration:

First, configure the Cisco switch. For this example, we’ll use a Cisco Catalyst switch and set up a static EtherChannel:

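    Switch# configure terminal
    ! Hash on source and destination IP to match "Route based on IP hash" (global setting)
    Switch(config)# port-channel load-balance src-dst-ip
    ! "mode on" builds a static EtherChannel: no LACP or PAgP negotiation
    Switch(config)# interface range GigabitEthernet 0/1 - 2
    Switch(config-if-range)# channel-group 1 mode on
    Switch(config-if-range)# exit
    Switch(config)# interface Port-channel 1
    ! On models that also support ISL, first run: switchport trunk encapsulation dot1q
    Switch(config-if)# switchport mode trunk
    Switch(config-if)# switchport trunk allowed vlan all
    Switch(config-if)# exit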

2. VMware ESXi Configuration:

Next, configure the ESXi host's standard vSwitch in the vSphere Client:

- Log in to the vSphere Client and select the ESXi host in the inventory.

- Go to the Configure tab and click on Networking.

- Click on Virtual switches and select the vSwitch associated with the NICs.

- Click on Edit settings.

- Under Teaming and Failover, select Route based on IP hash for Load Balancing.

- Under Active adapters, make sure every NIC that belongs to the EtherChannel is listed, and leave none of them under Standby or Unused adapters.

- Click OK.
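The same vSwitch change can also be made from the ESXi shell with esxcli. The small helper below only builds the command string; it does not run anything. It assumes a standard vSwitch named vSwitch0 with uplinks vmnic0 and vmnic1 already attached (hypothetical names), and the function name `iphash_teaming_command` is also hypothetical. Check the option names against `esxcli network vswitch standard policy failover set --help` on your host before relying on them.

```python
import shlex


def iphash_teaming_command(vswitch, uplinks):
    """Build an esxcli command that sets IP-hash teaming on a standard vSwitch.

    The uplinks must already be attached to the vSwitch; they are all listed
    as active because IP hash should not be combined with standby adapters.
    """
    if not uplinks:
        raise ValueError("at least one uplink is required")
    args = [
        "esxcli", "network", "vswitch", "standard", "policy", "failover", "set",
        "--vswitch-name", vswitch,
        "--load-balancing", "iphash",
        "--active-uplinks", ",".join(uplinks),
    ]
    # shlex.join quotes any argument that would need quoting in a shell.
    return shlex.join(args)


if __name__ == "__main__":
    print(iphash_teaming_command("vSwitch0", ["vmnic0", "vmnic1"]))
```

After running the printed command on the host, `esxcli network vswitch standard policy failover get --vswitch-name vSwitch0` shows the resulting teaming policy.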

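Remember that the load balancing policy must be set to Route based on IP hash on the vSwitch when using static EtherChannel on the Cisco switch. Leave the other teaming and failover policies at their default values; in particular, Network failure detection must stay at Link status only, because beacon probing is not supported with IP hash.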
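Before connecting the host to the EtherChannel, the vSwitch-side rules for static EtherChannel can be checked with a short validator: load balancing set to IP hash, at least one active adapter, no standby or unused adapters, and failure detection left at link status only. The dictionary layout and the function name `validate_iphash_policy` are hypothetical; populate the dictionary from whatever source you use to read the host's settings.

```python
def validate_iphash_policy(policy):
    """Return a list of problems; an empty list means the policy looks usable.

    `policy` is a hypothetical dict describing the vSwitch teaming settings, e.g.
    {"load_balancing": "iphash", "active": ["vmnic0", "vmnic1"],
     "standby": [], "unused": [], "failure_detection": "link"}
    """
    problems = []
    if policy.get("load_balancing") != "iphash":
        problems.append("load balancing must be 'Route based on IP hash'")
    if not policy.get("active"):
        problems.append("no active adapters")
    # IP hash expects every uplink in the EtherChannel to be active.
    for role in ("standby", "unused"):
        if policy.get(role):
            problems.append(f"move {role} adapters {policy[role]} to active")
    # A missing key is treated as the default, link status only.
    if policy.get("failure_detection", "link") != "link":
        problems.append("beacon probing is not supported with IP hash; use link status only")
    return problems


if __name__ == "__main__":
    good = {"load_balancing": "iphash", "active": ["vmnic0", "vmnic1"],
            "standby": [], "unused": [], "failure_detection": "link"}
    print(validate_iphash_policy(good))  # []
```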

Always test your configuration to make sure it works as expected, for example by disconnecting one uplink at a time and confirming that traffic keeps flowing. A misconfigured link aggregation can result in poor performance or a complete loss of connectivity.